Title: Visualizing AI vs. Human Performance In Technical Tasks

Source:

[None]

URL Source: https://www.zerohedge.com/technolog ... an-performance-technical-tasks

Published: Apr 29, 2025

Author: Tyler Durden

Post Date: 2025-04-29 07:05:05 by Horse

Keywords: None

Views: 37

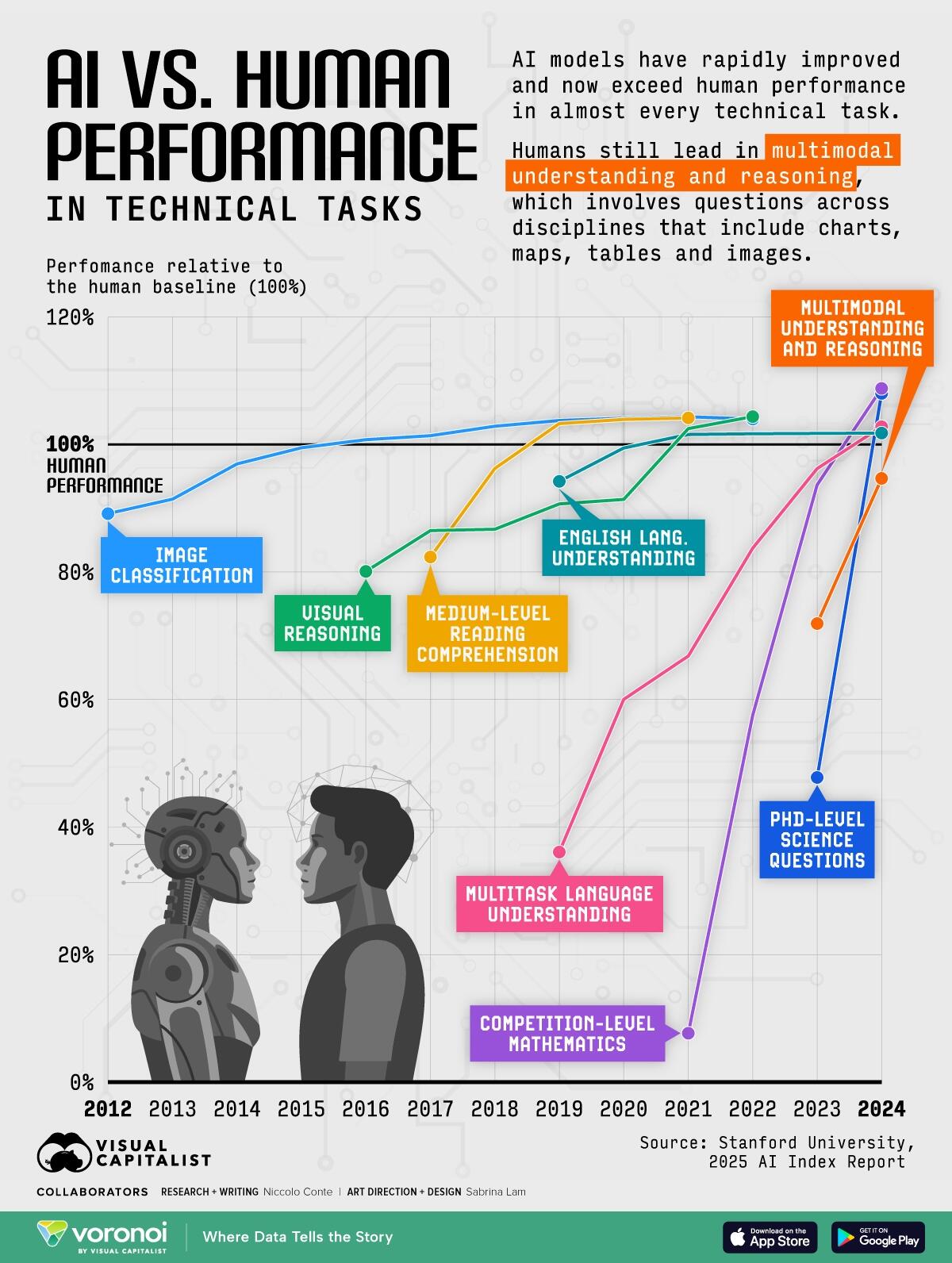

The gap between human and machine reasoning is narrowing...and fast.

Over the past year, AI systems have continued to advance rapidly, surpassing human performance in technical tasks where they previously fell short, such as advanced math and visual reasoning.

This graphic, via Visual Capitalist's Kayla Zhu, visualizes AI systems' performance relative to human baselines on eight AI benchmarks, measuring tasks including:

- Image classification
- Visual reasoning
- Medium-level reading comprehension
- English language understanding
- Multitask language understanding
- Competition-level mathematics
- PhD-level science questions
- Multimodal understanding and reasoning

This visualization is part of Visual Capitalist's AI Week, sponsored by Terzo. Data comes from the Stanford University 2025 AI Index Report.

An AI benchmark is a standardized test used to evaluate the performance and capabilities of AI systems on specific tasks.

AI Models Are Surpassing Humans in Technical Tasks

Below, we show how AI models have performed relative to the human baseline in various technical tasks in recent years.

| Year | Performance relative to the human baseline (100%) | Task |
|------|---------------------------------------------------|------|
| 2023 | 47.78% | PhD-level science questions |
| 2023 | 93.67% | Competition-level mathematics |
| 2023 | 96.21% | Multitask language understanding |
| 2023 | 71.91% | Multimodal understanding and reasoning |
| 2024 | 108.00% | PhD-level science questions |
| 2024 | 108.78% | Competition-level mathematics |
| 2024 | 102.78% | Multitask language understanding |
| 2024 | 94.67% | Multimodal understanding and reasoning |
| 2024 | 101.78% | English language understanding |

From ChatGPT to Gemini, many of the world's leading AI models are surpassing the human baseline in a range of technical tasks. The only task where AI systems still haven't caught up to humans is multimodal understanding and reasoning, which involves processing and reasoning across multiple formats and disciplines, such as images, charts, and diagrams. However, the gap is closing quickly.
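The "relative to the human baseline" percentages appear to be each model's benchmark score divided by the human score on the same benchmark. As a minimal sketch, assuming that simple ratio (the AI Index's exact method is not stated here), the Python below recomputes the two multimodal rows from the raw MMMU scores the article quotes: 59.4% for Google Gemini at the end of 2023, 78.2% for OpenAI's o1 in 2024, and a human baseline of 82.6%. The function name `relative_to_baseline` is a hypothetical helper, not part of any published tool.

```python
# Hypothetical helper (assumption): a model's benchmark score expressed as a
# percentage of the human score on the same benchmark; 100% means parity.
def relative_to_baseline(model_score: float, human_score: float) -> float:
    if human_score <= 0:
        raise ValueError("human baseline must be positive")
    return 100.0 * model_score / human_score


# MMMU accuracies (in percent) as quoted in the article.
HUMAN_MMMU = 82.6
scores = {
    "2023 (Google Gemini)": 59.4,
    "2024 (OpenAI o1)": 78.2,
}

for label, score in scores.items():
    rel = relative_to_baseline(score, HUMAN_MMMU)
    gap = HUMAN_MMMU - score  # shortfall in percentage points, not a ratio
    print(f"{label}: {rel:.2f}% of baseline, {gap:.1f} pp below humans")

# Prints:
# 2023 (Google Gemini): 71.91% of baseline, 23.2 pp below humans
# 2024 (OpenAI o1): 94.67% of baseline, 4.4 pp below humans
```

Both ratios round to the 71.91% and 94.67% listed for multimodal understanding and reasoning, which supports reading the table as plain score ratios. Note the difference between the two measures: the 4.4-point gap is an absolute difference in accuracy, while 94.67% is a ratio to the human score.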
In 2024, OpenAI's o1 model scored 78.2% on MMMU, a benchmark that evaluates models on multi-discipline tasks demanding college-level subject knowledge. That score was just 4.4 percentage points below the human baseline of 82.6%, and a major jump from the end of 2023, when Google Gemini scored just 59.4%, highlighting the rapid improvement of AI performance in these technical tasks. The o1 model also has one of the lowest hallucination rates of any AI model.

To dive into all the AI Week content, visit our AI content hub, brought to you by Terzo. To learn more about the global AI industry, check out this graphic that visualizes which countries are winning the AI patent race.

Poster Comment: X AI is the newest entry into AI. It is less biased politically and is superior to all others except ChatGPT, which is much older. X AI is getting better daily and will soon be the best.

Post Comment Private Reply Ignore Thread